Staying ahead of the curve in a rapidly changing compliance landscape is tough, and new AI regulations can make it challenging to do business.

Artificial intelligence is no longer a future concern; it’s today’s governance challenge. As AI systems become more deeply embedded in business operations, regulators across the United States are racing to establish frameworks that protect individuals, ensure fairness and preserve trust.

For security leaders, that means one thing: compliance complexity is about to grow.

Below, we’ve outlined the key AI security and privacy laws coming into effect in 2026, broken down by geography. Knowing what’s ahead gives you a head start on preparation and helps you mind what matters before enforcement begins.

Federal Outlook: The Framework Phase

At the national level, the U.S. has yet to enact a comprehensive AI or privacy law with a firm 2026 start date. Instead, we’re seeing guidance, frameworks and executive actions that pave the way for future regulation.

What to watch:

- The White House Executive Order on Safe, Secure, and Trustworthy AI (2023) was rescinded in January 2025, but the agency guidance and standards work it set in motion still shape expectations.

- NIST AI Risk Management Framework (RMF) is becoming the de facto baseline for responsible AI practices. Expect increasing pressure for organizations to demonstrate RMF alignment during audits and vendor reviews.

- Federal agencies including the FTC, EEOC and CFPB have all issued warnings on AI bias, consumer protection and data handling, which signal stronger enforcement to come.

The takeaway

Federal law isn’t here yet, but accountability already is. Companies deploying AI must treat these frameworks as compliance prerequisites, not suggestions.

State-Level Regulations: The New Front Lines

States are leading the charge, each introducing distinct laws to govern AI development, deployment, and data use. Here are the most significant to watch in 2026:

Colorado – The Colorado AI Act (SB 205)

Effective: June 30, 2026 (delayed from the original February 1, 2026 date)

Colorado became the first state to pass a comprehensive AI accountability law. It defines “high-risk” AI systems and requires:

- Impact assessments for systems influencing employment, education, finance, or healthcare

- Transparency disclosures to users

- Risk mitigation and bias monitoring obligations for developers and deployers

California – The AI Transparency Act (SB 942)

Effective: January 1, 2026

California expands its privacy leadership into AI. The new law mandates:

- Clear notice when consumers interact with AI systems

- Documentation of AI functionality and data sources

- Disclosure requirements for generative and conversational AI platforms

Texas – The Responsible AI Governance Act (TRAIGA)

Effective: January 2026

Texas focuses on accountability and governance, requiring:

- Documented AI lifecycle management

- Red-teaming, transparency reporting, and oversight for “high-impact” systems

- Annual internal reviews to validate compliance

New York State – The Responsible AI Safety and Education (RAISE) Act

Effective: January 1, 2026

This bill targets “frontier” or “high-risk” AI models that could influence safety, financial systems, or civic operations. It introduces:

- Independent audits and incident reporting

- Safety plans and documentation of model intent

- Public transparency reports for large AI developers

Local & Municipal: New York City Leads the Way

NYC Local Law 144 – Automated Employment Decision Tools

In effect since 2023, continuing enforcement into 2026

This law regulates the use of automated hiring and promotion tools. It requires organizations to:

- Conduct independent bias audits annually

- Notify candidates and employees when AI tools are used in decision-making

- Publish summaries of audit results for transparency

While not new, its continued enforcement and the expansion of similar policies in other cities mark a pivotal shift: municipalities are no longer waiting for federal action.
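For teams wondering what a bias audit actually measures, one common metric is the impact ratio: each category’s selection rate divided by the selection rate of the most-selected category. As we understand the city’s implementing rules, this is the core calculation an auditor reports. The sketch below is illustrative only; the function name, the input shape and the sample numbers are our own assumptions, and it is no substitute for an independent audit.

```python
from collections import Counter


def impact_ratios(outcomes):
    """Return {category: impact ratio} from (category, was_selected) pairs.

    Impact ratio = a category's selection rate divided by the selection
    rate of the most-selected category.
    """
    totals = Counter()
    selected = Counter()
    for category, was_selected in outcomes:
        totals[category] += 1
        if was_selected:
            selected[category] += 1

    rates = {c: selected[c] / totals[c] for c in totals}
    highest = max(rates.values(), default=0.0)
    if highest == 0:
        # Nobody was selected, so the ratio is undefined.
        return {c: None for c in rates}
    return {c: rate / highest for c, rate in rates.items()}


# Hypothetical screening results: 40 of 100 selected in one group,
# 30 of 100 selected in another.
sample = (
    [("group_a", True)] * 40 + [("group_a", False)] * 60
    + [("group_b", True)] * 30 + [("group_b", False)] * 70
)
for category, ratio in impact_ratios(sample).items():
    print(f"{category}: {ratio:.2f}")
```

In this made-up sample, group_b’s selection rate (0.30) divided by group_a’s (0.40) gives an impact ratio of 0.75. What counts as an acceptable ratio is a question for your auditor and counsel, not something this snippet decides.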

What’s next:

The NYC AI Action Plan (2023) sets the stage for additional oversight of how city agencies and contractors deploy AI, establishing a framework other municipalities are likely to follow by 2026.

Why This Matters: AI, Privacy and Data Security Converge

Even if your organization is not headquartered in one of these jurisdictions, these rules will likely apply if you do any business there. In practice, that puts most organizations in scope for at least some of them.

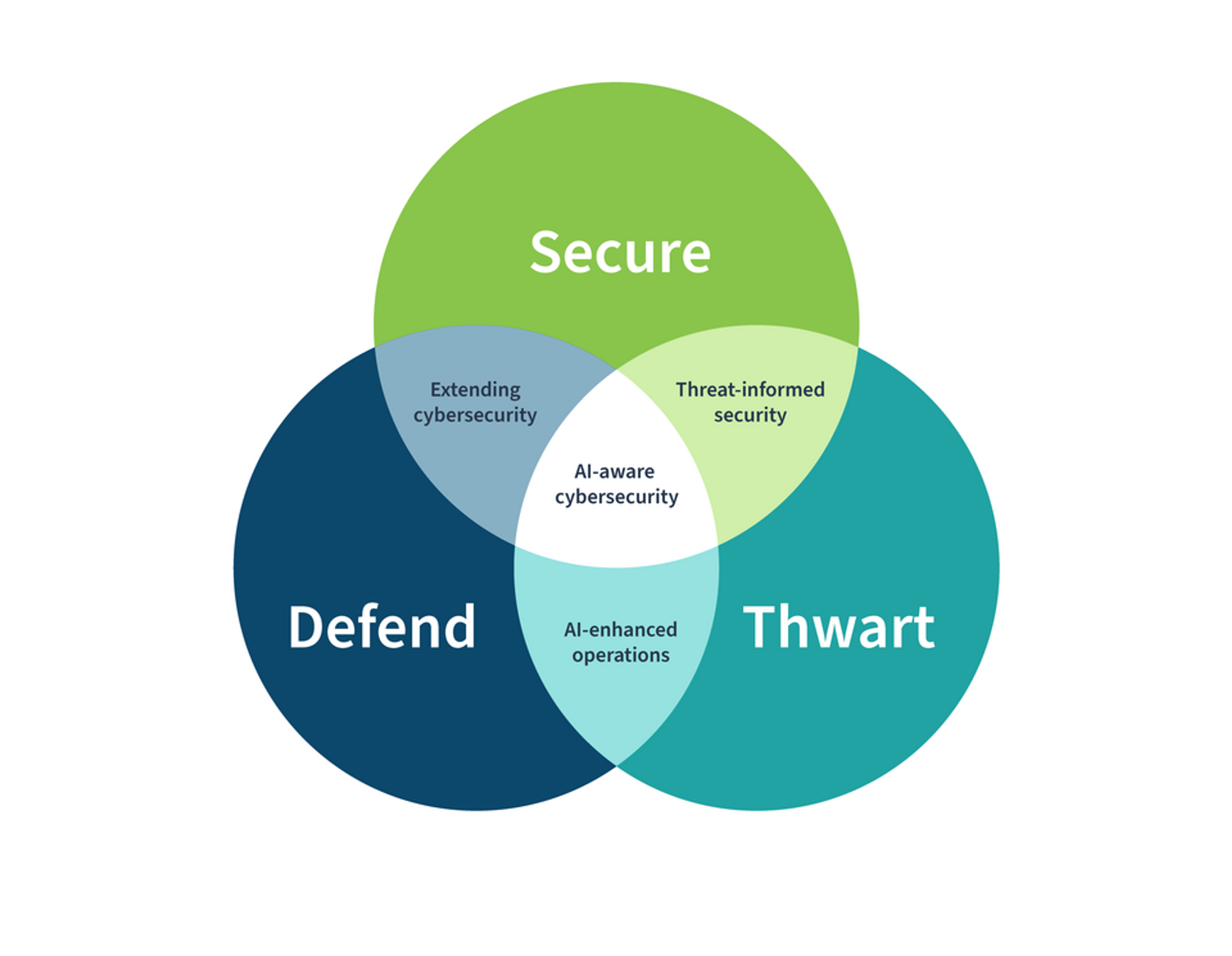

Every one of these laws ties back to a shared principle: AI systems can’t be trusted unless data is protected, traceable and governed intelligently. For CISOs, compliance officers, and data protection teams, 2026 isn’t just about checking regulatory boxes; it’s about building defensible systems that demonstrate control.

What leaders should do now:

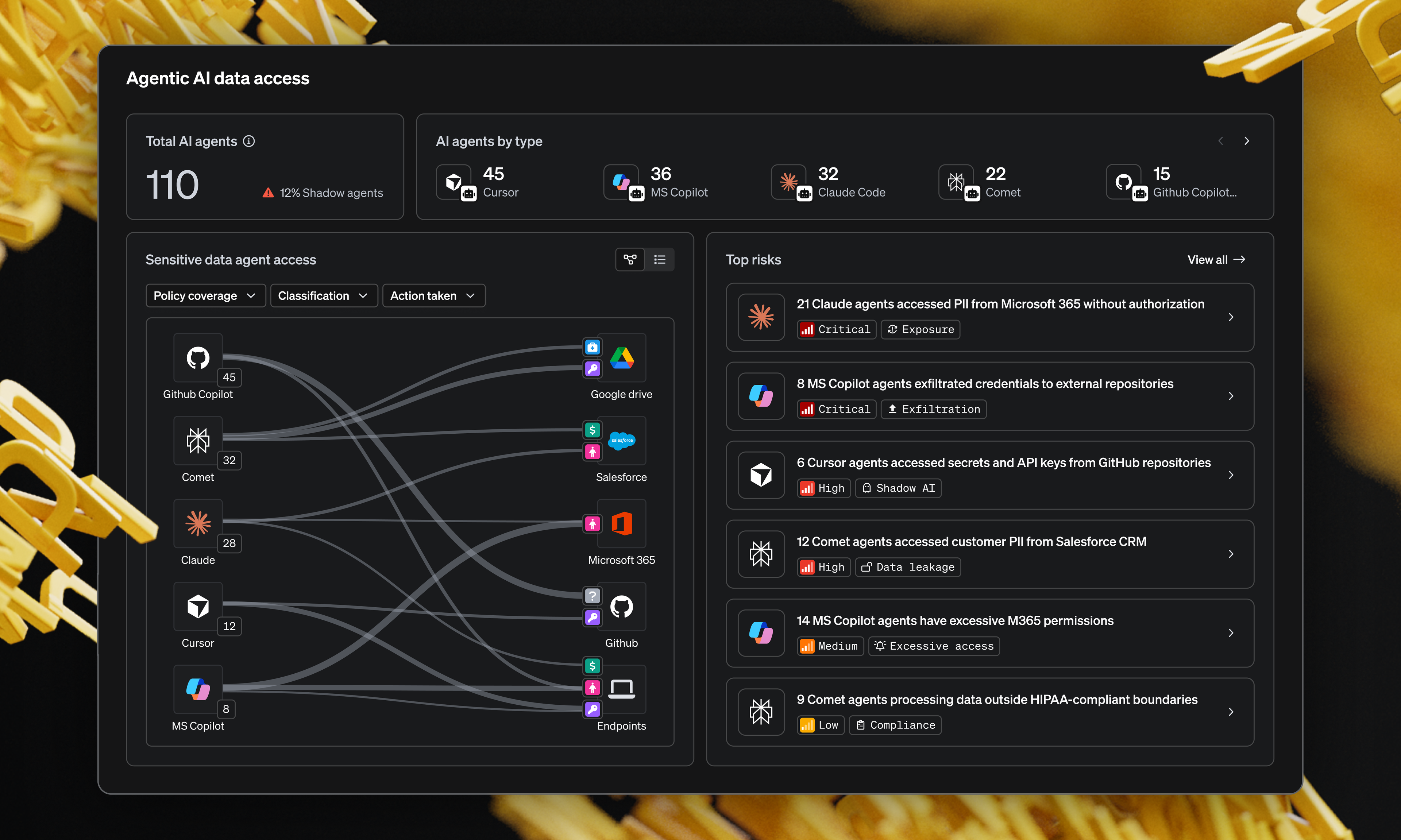

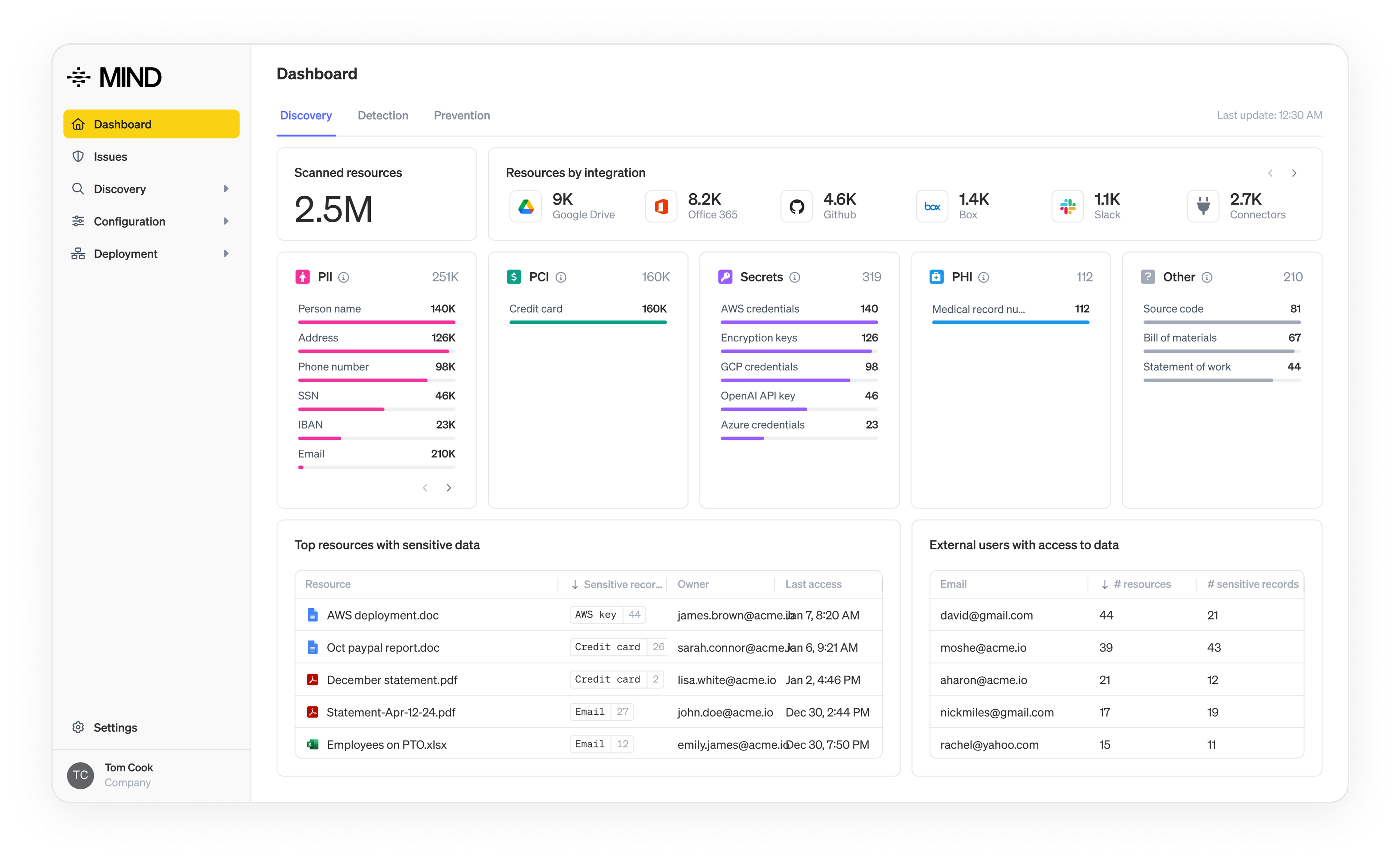

- Map your AI footprint: Know where AI systems exist across the organization.

- Document data flows: Understand what data AI models access, store and generate.

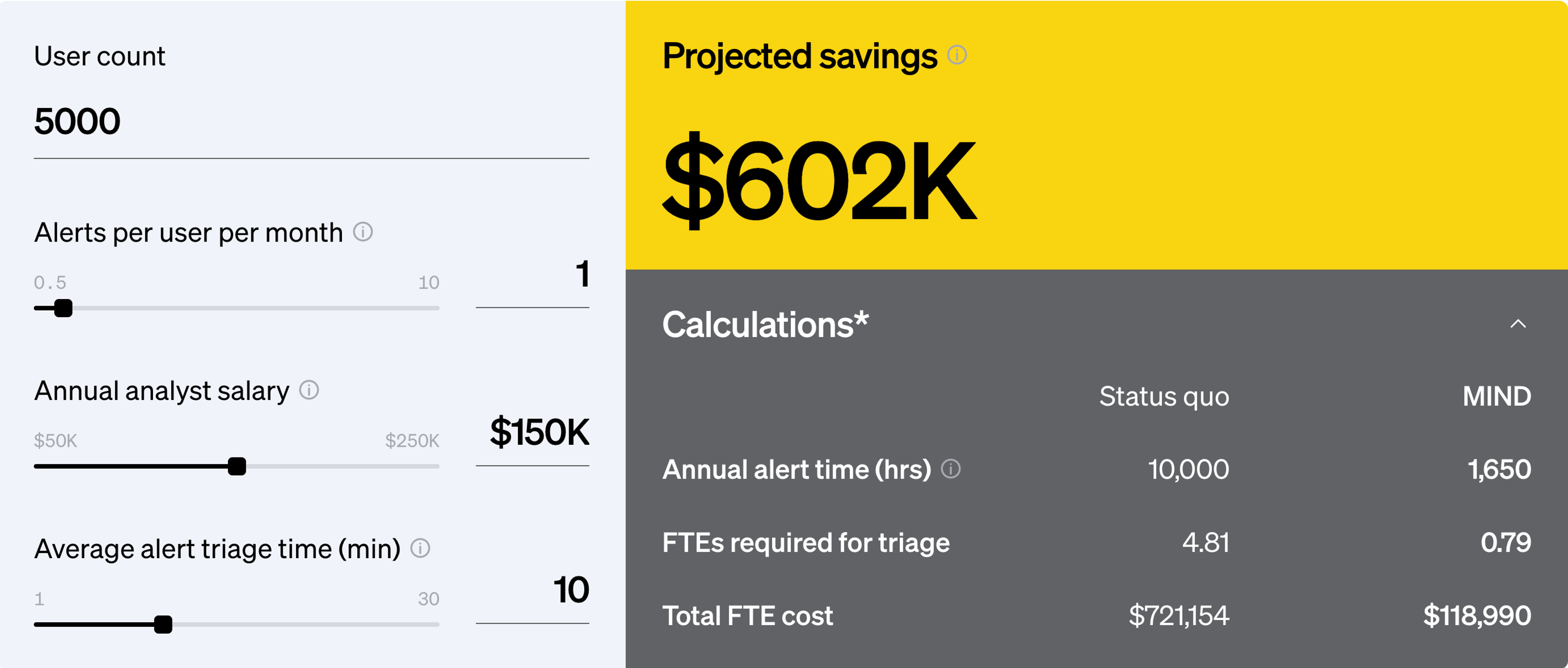

- Automate compliance evidence: Use intelligent DLP and classification to track sensitive data, AI usage and risk posture in real time.

- Stay adaptive: Treat each state law as a building block toward a unified, responsible AI governance model.
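The first two steps can start as something very small: a structured inventory that records each AI system, the data it touches and where it is used. Here is a minimal sketch in Python. The `AISystem` record, its field names, the jurisdiction codes and the sample entry are all hypothetical, and the lookup only flags laws named in this article for review; whether a law actually applies depends on its scope definitions and on legal advice.

```python
from dataclasses import dataclass, field

# Hypothetical lookup from a jurisdiction code to the law discussed above.
LAWS_BY_JURISDICTION = {
    "CO": "Colorado AI Act (SB 205)",
    "CA": "California AI Transparency Act (SB 942)",
    "TX": "Texas Responsible AI Governance Act (TRAIGA)",
    "NY": "New York RAISE Act",
    "NYC": "NYC Local Law 144",
}


@dataclass
class AISystem:
    """One entry in an AI inventory (field names are illustrative)."""
    name: str
    owner: str
    purpose: str
    data_accessed: list[str] = field(default_factory=list)
    data_generated: list[str] = field(default_factory=list)
    jurisdictions: list[str] = field(default_factory=list)


def laws_to_review(system):
    """List the laws worth reviewing for a system, based on where it is used."""
    return [
        LAWS_BY_JURISDICTION[code]
        for code in system.jurisdictions
        if code in LAWS_BY_JURISDICTION
    ]


# Hypothetical example entry.
screener = AISystem(
    name="resume-screener",
    owner="Talent Acquisition",
    purpose="Rank inbound job applications",
    data_accessed=["resumes", "application forms"],
    data_generated=["candidate scores"],
    jurisdictions=["CO", "NYC"],
)
for law in laws_to_review(screener):
    print(f"{screener.name}: review {law}")
```

Keeping the inventory as plain records makes it easy to export as audit evidence later, and new state laws become one more row in the lookup rather than a new process.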

MIND What Matters

Regulation will continue to evolve, but readiness doesn’t have to wait. MIND helps organizations stay ahead of AI-driven data risk with intelligent discovery, automated policy enforcement and real-time compliance visibility.

As AI transforms how we work, MIND ensures your organization stays compliant, secure and confident, automatically.

Ready to prepare for 2026? Let’s turn compliance complexity into clarity.