Agentic AI is transforming the enterprise.

Autonomous agents now summarize, decide and act on behalf of humans. They move data across SaaS apps, endpoints and AI tools at machine speed. For security leaders, this creates a new reality: innovation depends on AI, but traditional data loss prevention (DLP) wasn’t built for autonomous systems.

In fact, without the right controls, agentic AI can be a data security nightmare.

The result is a widening gap between how fast data moves and how fast security can respond.

Why does legacy DLP fail in an agentic AI world?

Most DLP programs were designed for a human-first world.

Static rules.

Predictable workflows.

Manual review.

In an agentic environment, those assumptions no longer hold.

AI agents don’t pause for approval. They don’t follow linear paths. And they don’t generate neat, low-volume alerts that teams can triage by hand.

This leaves security teams with an impossible choice: slow AI adoption or accept blind spots.

Why does speed matter for DLP in AI environments?

Agentic AI changes the threat model.

Data can be accessed, transformed and shared in seconds. Risk compounds quickly. And when detection and response lag behind execution, prevention becomes a post-mortem exercise.

At AI speed, security has to move before humans can.

What does data-centric AI security look like?

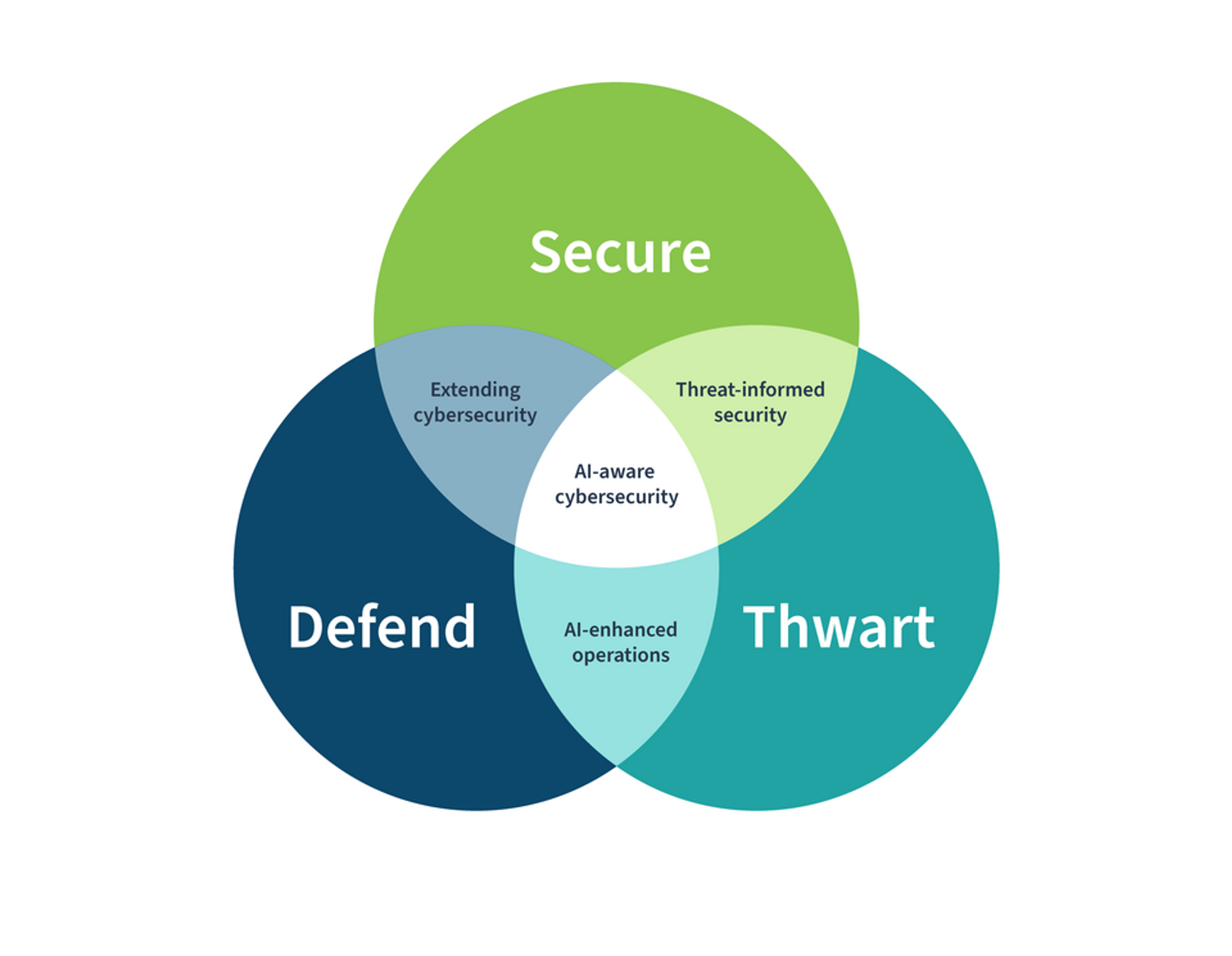

As organizations race to adopt agentic AI, new security categories have emerged. While each plays a role, none are sufficient on their own to secure AI-driven business outcomes.

AI Security Posture Management (AI-SPM) helps teams understand how AI systems are configured and governed. AI runtime security monitors prompts, outputs and behavior once AI is already operating. Both are important, but both focus on the AI systems themselves.

What they don’t address is the most critical question: should this AI have access to this data in the first place?

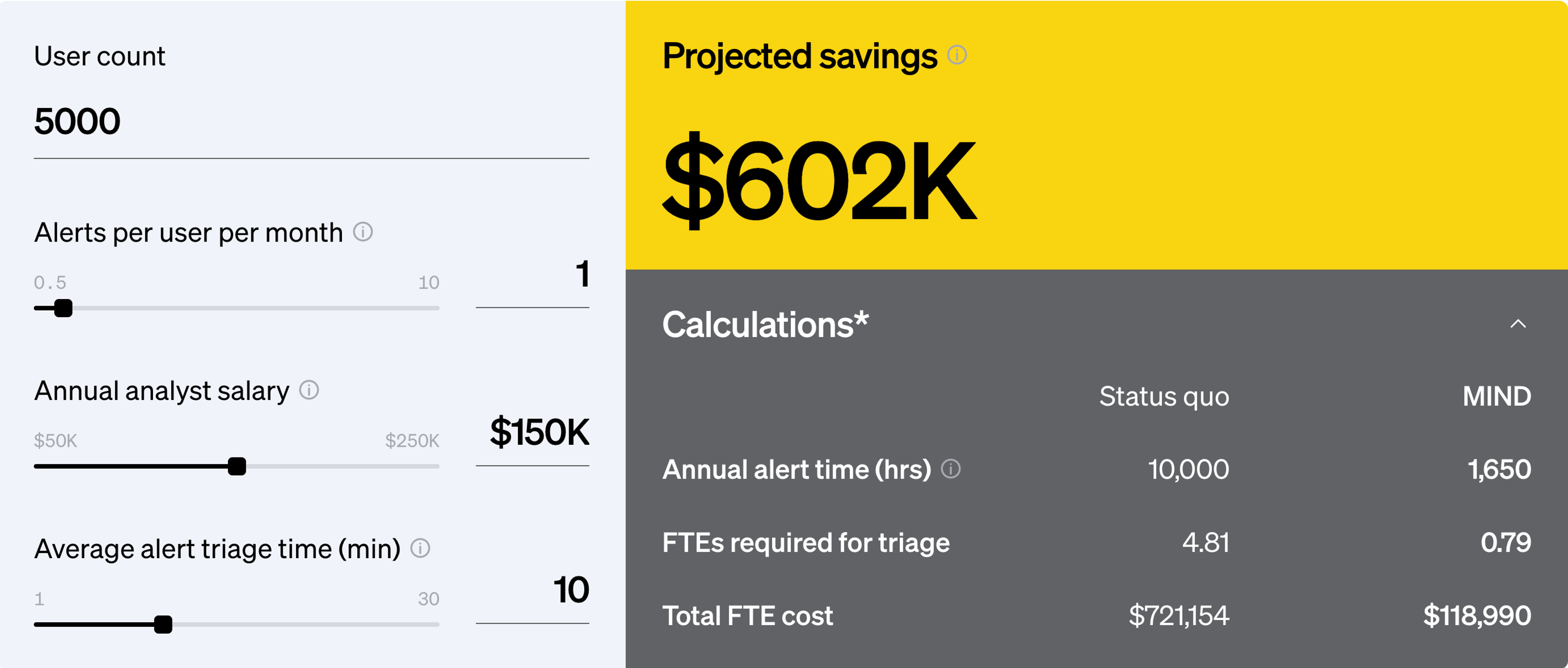

MIND takes a data-centric approach to AI security, ensuring sensitive information is understood, governed and protected before any agentic AI can access or act on it. Instead of reacting to model behavior or configuration drift, MIND secures the data layer that all AI systems depend on.

This makes DLP fundamental to AI security. Without data-centric controls, posture and runtime protections can only respond after risk is introduced.

By putting data security at the center of AI adoption, MIND enables organizations to innovate with confidence and build a secure future for agentic AI.

That’s how security keeps up with AI.

How can you secure agentic AI without slowing innovation?

To safely adopt agentic AI, organizations need three core capabilities:

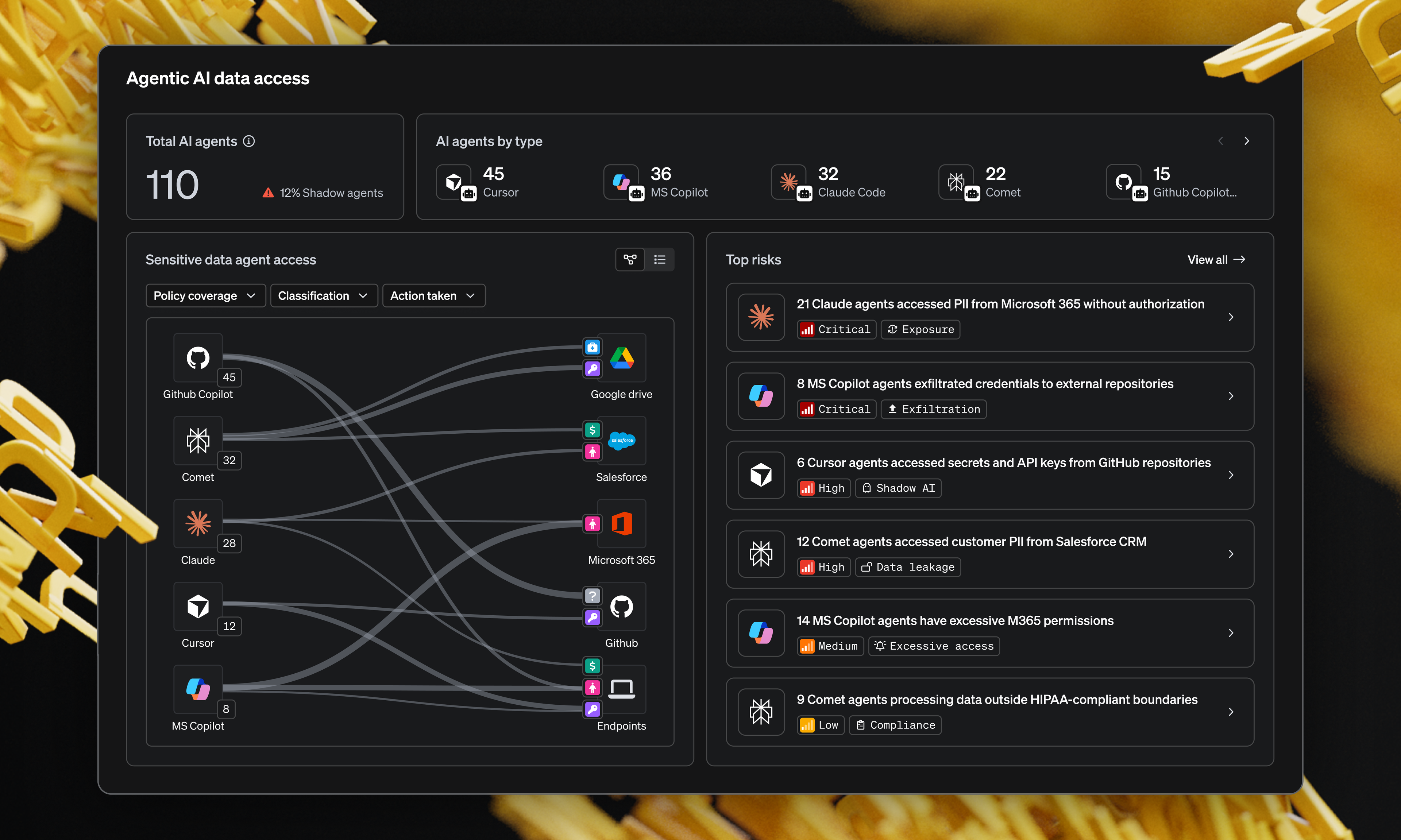

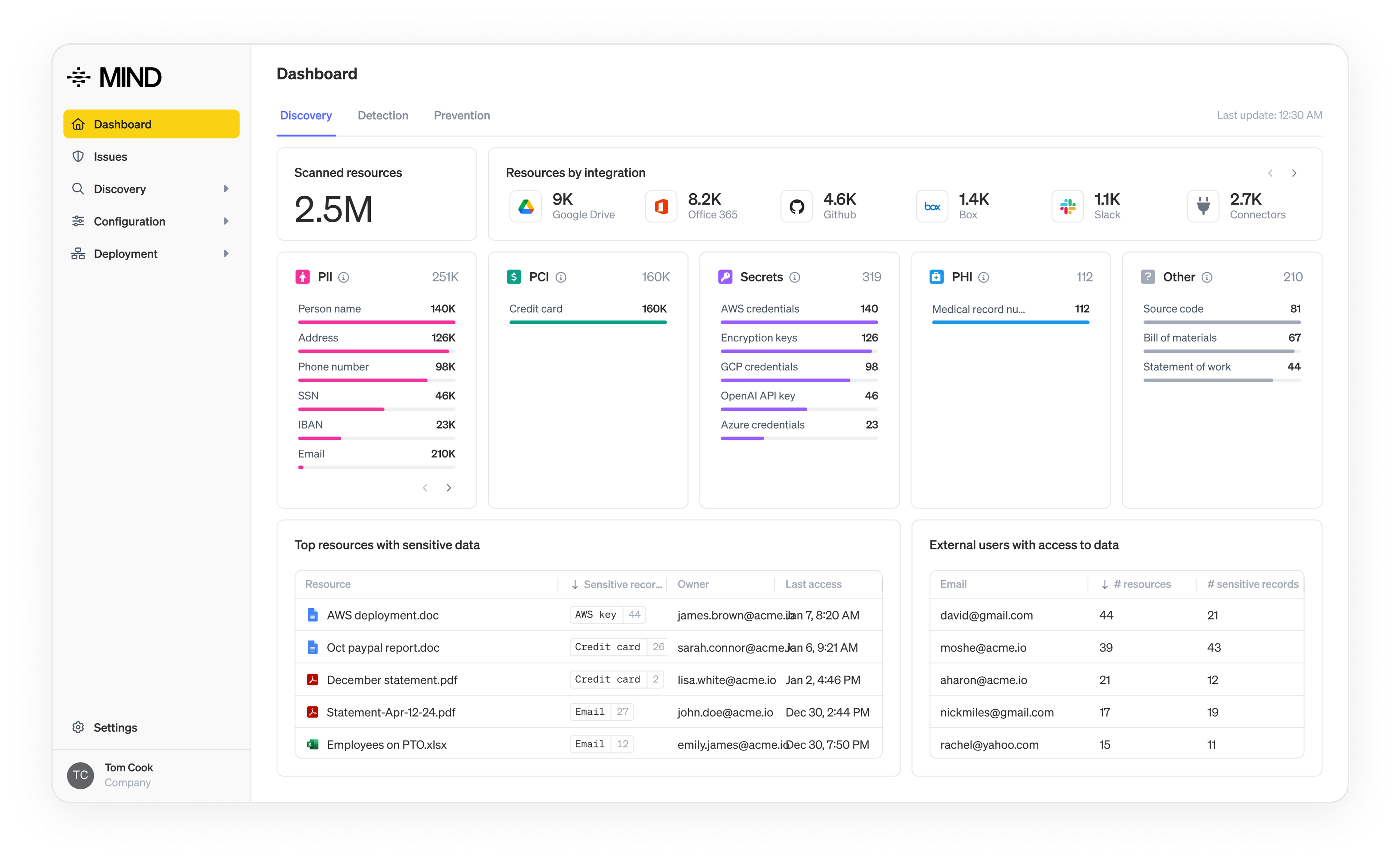

- How do you know what data AI agents are accessing?

  You can’t secure what you can’t see. MIND continuously discovers and understands sensitive data across SaaS apps, endpoints and AI workflows so security teams know exactly what information AI agents are touching.

- How do you know which AI agents are active in your environment?

  Agentic AI shows up in many forms: SaaS features, homegrown agents and third-party tools. MIND provides visibility into where AI agents operate so organizations can reduce blind spots and unmanaged risk.

- How do you build the right controls between data and AI?

  MIND helps teams enforce context-aware controls that ensure data and AI interact safely. Policies are based on risk and intent, not static rules, allowing AI to operate while sensitive data stays protected.

The result is security that enables AI adoption instead of blocking it.

How do you mind what matters at AI speed?

Agentic AI isn’t slowing down. Neither is data movement.

With DLP at the speed of AI, MIND helps security teams stay ahead, protect what matters and adopt AI with confidence.

If your organization is embracing agentic AI, it’s time to rethink how DLP works.