From compliance to confidence: How autonomous DLP can restore security value

DLP promised to protect sensitive data and keep threats out without disrupting business. The reality has not lived up to this aspiration.

Data loss prevention (DLP) emerged in the early 2000s, a time dominated by email attachments, FTP transfers and on-premises file servers. Its initial goal was clear: prevent sensitive data from leaving these established locations. Early solutions identified patterns such as credit card or social security numbers, then issued alerts or blocked outgoing transmissions. This was a sensible approach to the problems of that era.

But the world changed. Cloud applications exploded. SaaS became the norm. Users began working remotely, often from unmanaged devices. The data surface expanded rapidly and the context around data use became more complex. And all of this happened before the seismic shift that is Generative AI (GenAI).

Timeline of data security

- Early 2000s: DLP emerged with a focus on endpoint and network detection.

- 2010s: DLP diversified and consolidated, becoming an ecosystem including cloud access security brokers and classification tools.

- Late 2010s: Microsoft and Google integrated basic DLP features, making them more accessible but less configurable.

- 2020s: Remote work and SaaS sprawl led to accelerated consolidation among DLP vendors. Traditional DLP was augmented by specialized tools like CASB or DSPM.

- 2024: GenAI tools drove an explosion of unstructured sensitive data and risk.

Security teams find themselves burdened with alert fatigue, policy sprawl and mounting complexity. Employees face friction, delays and blocked workflows. And executives, despite years of investment, still ask, “Are we any safer?”

At the heart of this disillusionment is a legacy model of DLP that hasn’t kept pace with how we work today. Data now flows freely across cloud platforms, AI tools, endpoints and third-party applications. Traditional DLP tools, built for static perimeters and simplistic pattern-matching, are no longer enough. What was once a useful compliance checkbox has become a costly anchor, dragging down both productivity and security.

The question isn’t whether DLP is necessary – now we must ask, is it working? And for many, the answer is clear: it’s not.

The 5 core pains of legacy DLP

1

Inaccurate detection

Legacy DLP systems were built around static rules and predefined patterns, searching for strings that resemble social security numbers, credit cards or other common identifiers. While this might have been effective two decades ago, today’s data environment is too dynamic and unstructured for simplistic pattern matching to be sufficient. Documents no longer reside solely in structured file shares. They're in ticketing system comments, SaaS platforms, screenshots and Slack messages. Sensitive information appears in formats and places that traditional tools simply can’t process.

This results in two fundamental failures: the failure to detect truly sensitive data and the failure to block actual risky activity. These systems are notorious for producing false positives, triggering alarms for harmless actions while missing actual leaks. Over time, security teams become desensitized. They either ignore alerts or spend hours sifting through false alarms, losing trust in the system and wasting valuable time. The ultimate danger? Real threats get buried in noise.
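To make the false-positive problem concrete, here is a minimal sketch of the kind of pattern-only rule described above. The regular expression, the sample text and the helper names are illustrative assumptions, not taken from any specific DLP product. The Luhn checksum is a standard card-number check, added here only to show how little a raw pattern knows about what it matched.

```python
import re

# Hypothetical legacy-style rule: any run of 16 digits, optionally grouped
# in fours by spaces or hyphens. Illustrative only.
CARD_PATTERN = re.compile(r"\b(?:\d{4}[ -]?){3}\d{4}\b")


def luhn_valid(candidate: str) -> bool:
    """Return True if the digits in `candidate` pass the Luhn checksum."""
    digits = [int(ch) for ch in candidate if ch.isdigit()]
    total = 0
    # Double every second digit, counting from the rightmost digit.
    for index, digit in enumerate(reversed(digits)):
        if index % 2 == 1:
            digit *= 2
            if digit > 9:
                digit -= 9
        total += digit
    return total % 10 == 0


def pattern_only_hits(text: str) -> list[str]:
    """Everything the pattern-only rule would alert on."""
    return CARD_PATTERN.findall(text)


# Made-up sample: a ticket reference that merely looks like a card number,
# followed by a widely used test card number.
sample = "Ticket 1234-5678-9012-3456 was paid with card 4111 1111 1111 1111."

raw_hits = pattern_only_hits(sample)
checked_hits = [hit for hit in raw_hits if luhn_valid(hit)]
```

In this sketch the pattern alone flags both strings, while the checksum discards the ticket reference. Even then, the surviving match is a test number, which a rule with no business context cannot know.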

What’s needed is smarter detection, capable of recognizing business context, understanding data movement and differentiating between accidental exposure and malicious exfiltration. Without this evolution in detection, DLP will continue to be a liability rather than an asset.

2

Operational disruption

Security should enable productivity, not obstruct it. Yet, legacy DLP often disrupts legitimate workflows in the name of protection. Consider an employee trying to share a project plan with a vendor, only to be blocked by rigid rules that flag the file as sensitive without understanding the content or context. The result is confusion, frustration and workarounds that bypass security controls altogether.

This kind of disruption doesn’t just stall progress; it erodes trust between users and the security team. Employees begin to see DLP as a hurdle rather than a safeguard. Shadow IT grows as users turn to unsanctioned apps and tools to do their jobs without interference. Meanwhile, security teams are forced into a constant game of catch-up, trying to balance protection with usability.

What’s needed is a DLP that’s context-aware and adaptive, capable of enforcing policies with nuance and sensitivity to business processes. Rather than hard stops, it should provide soft interventions, like user coaching or contextual warnings, to guide behavior without derailing it. The goal isn’t just to stop bad actions, but to empower safe ones.

3

Policy management friction

Creating effective DLP policies is harder than it sounds. It requires translating abstract ideas like 'confidentiality' or 'proprietary data' into concrete, machine-readable rules. That burden often falls on stakeholders such as legal, HR and compliance professionals who know the risks but lack technical fluency. They’re limited by policy dropdowns, RegEx patterns and Boolean logic. The result is often vague or overbroad rules that don’t align with real-world behavior.

Security teams then spend their time translating, tuning and troubleshooting. When policies break, either blocking too much or too little, the failure is quickly seen as someone else's problem. Stakeholders lose confidence. Analysts lose hours rewriting logic. The system becomes a patchwork of exceptions and overrides.

What’s needed is a better bridge between intent and implementation for effective DLP. Intuitive policy creation tools are essential. These tools must focus on the primary areas where data loss can occur and be specific enough to protect sensitive data within its various contexts. Personalization is also key, allowing rules to be applied broadly while easily managing exceptions based on business requirements. Without these elements, policy friction will hinder progress.

4

High overhead

Running a legacy DLP program isn’t just complex, it’s expensive. Many older platforms require on-premises infrastructure, dedicated appliances or custom integrations to function properly. Deploying them takes months and maintaining them is an ongoing, resource-intensive effort. Licensing models are rigid, support is costly and updates rarely align with the pace of business change.

Even when cloud extensions exist, they’re often bolt-ons like additional modules or connectors that lack cohesion. As organizations grow, so do the deployment headaches. Adding a new SaaS platform? Expect weeks of setup and testing. Changing a policy? Prepare for a cascade of configuration dependencies.

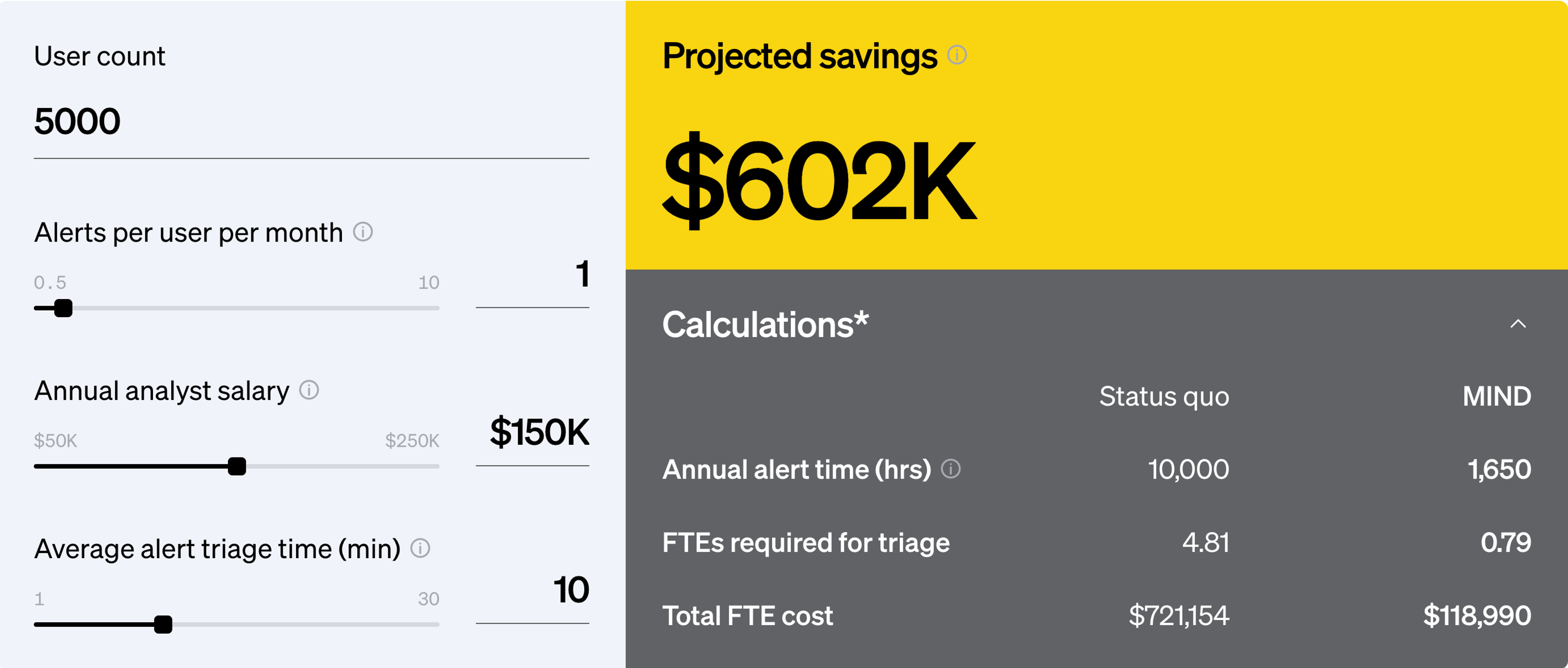

And then there’s the human cost. Security engineers are consumed by rule tuning, alert triage and system maintenance. Instead of focusing on strategic risk reduction, they’re trapped in reactive cycles. Organizations wind up hiring more staff just to keep the lights on, eroding ROI and inflating total cost of ownership.

What’s needed is a modern, cloud-native architecture that’s self-learning, auto-updating and easy to integrate. One that reduces overhead, not multiplies it. Without that, the cost of protection, and the staff required to achieve it, will continue to outweigh the benefits.

5

Low ROI

Despite the time, effort and expense invested, many organizations struggle to articulate what their DLP programs actually achieve. Are they safer? Has the risk gone down? Can they prove it? Too often, the answer is unclear. Legacy DLP tools focus on detection and logging, but rarely provide actionable insights or clear metrics.

The reason is simple: they were built to satisfy audit requirements, not drive outcomes. Reports are compliance-focused, not risk-based. They show counts and issues, not the reduction in exposure or incidents. This leaves CISOs in a tough spot, forced to justify budget for a DLP tool without concrete evidence of risk reduction or improvement.

Worse, because DLP is so noisy and high-friction, many teams end up drastically narrowing its scope or turning it off altogether. It becomes shelfware, running in silent mode to avoid disruption. This defeats the purpose entirely.

What’s needed is a modern DLP approach that aligns with measurable business value through real-time dashboards, risk scoring and reduction metrics. It should demonstrate not just coverage, but confidence, transforming DLP from a liability into a security force multiplier.

DLP has become a compliance checkbox

So why do legacy DLP systems persist, even in organizations that know they're ineffective? The answer lies in a quiet but powerful force: compliance frameworks. For many enterprises, DLP exists not as a proactive security tool, but as a reactive regulatory requirement. As long as the box is checked and the auditor is satisfied, the system is deemed good enough, even if everyone involved knows it’s not actually doing the job.

This mindset has shaped the evolution of the market. Vendors have long optimized their offerings to pass audits, not to stop breaches. Reports look impressive. Dashboards track compliance metrics. But under the hood, there’s little real data security. The tools exist to be defensible on paper, not effective in practice.

It’s a costly illusion. Executives believe they’ve bought a security tool and security teams believe they’ve implemented it. In reality, sensitive data is still vulnerable. The only thing that’s been protected is the company’s liability.

Compliance standards that require DLP

- Health Insurance Portability and Accountability Act (HIPAA): mandates protection of protected health information (PHI)

- Payment Card Industry Data Security Standard (PCI-DSS): requires controls to prevent unauthorized access to cardholder data

- Sarbanes-Oxley Act (SOX): emphasizes internal controls over financial data, including data protection

- General Data Protection Regulation (GDPR): enforces strict data handling rules for the personal data of individuals in the EU

- California Consumer Privacy Act / California Privacy Rights Act (CCPA/CPRA): requires safeguards for California residents' personal information

- Gramm-Leach-Bliley Act (GLBA): obligates financial institutions to protect sensitive customer information

- Family Educational Rights and Privacy Act (FERPA): protects student education records

- Federal Information Security Management Act (FISMA): requires federal agencies and contractors to implement strong data protection

- ISO/IEC 27001 & 27701: internationally recognized standards for information security and privacy management

- NIST SP 800-53 & 800-171: U.S. frameworks specifying security controls for federal and contractor systems

But a new generation of security leaders is calling this bluff. These are practitioners who want to do security, not just show security. They recognize that while compliance may keep you out of trouble, it doesn’t keep you safe. They want tools that help them make decisions, prioritize risks and act with precision.

They’re asking harder questions: Are we reducing data exposure? Can we prove that our controls are effective? Is our security posture improving month over month? And they’re frustrated by the answers legacy DLP gives them.

Legacy DLP isn’t just outdated, it’s out of step with modern leadership expectations. Today’s CISOs are expected to deliver results, not just readiness. To them, compliance is the bare minimum, not the goal. What they need is a DLP platform that supports their ambition.

Breaking free of the compliance checkbox means investing in tools that prioritize clarity, context and control. It means shifting from "how many alerts did we log" to "how much risk did we remove." It means building security that is visible, verifiable and valuable, beyond a line item on an audit report.
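One way to picture the shift from counting alerts to measuring risk removed is a simple month-over-month exposure metric. The sketch below is an assumption about how such a metric could be defined, not a description of any vendor's reporting. The function names and the snapshot counts are hypothetical.

```python
def exposure_reduction(previous_exposed: int, current_exposed: int) -> float:
    """Fraction of previously exposed sensitive items that are no longer exposed.

    A negative result means exposure grew instead of shrinking.
    """
    if previous_exposed <= 0:
        raise ValueError("previous_exposed must be positive")
    return (previous_exposed - current_exposed) / previous_exposed


def monthly_reductions(snapshots: list[int]) -> list[float]:
    """Month-over-month reduction for a series of exposure counts."""
    return [
        exposure_reduction(previous, current)
        for previous, current in zip(snapshots, snapshots[1:])
    ]


# Made-up counts of overshared sensitive files at the end of three months.
illustrative_counts = [400, 300, 150]
trend = monthly_reductions(illustrative_counts)
```

With these made-up counts, exposure falls by a quarter in the first month and by half in the second. That is a statement about risk removed, which an alert count alone cannot make.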

A new vision for data security

90%

of all enterprise data is unstructured

91%

of organizations say unstructured data is their biggest blindspot

The majority of enterprise data today is unstructured, and it's growing exponentially. In fact, unstructured data accounts for over 90% of all enterprise data, with a compound annual growth rate of roughly 55–65%. According to the ESG State of DLP report, 91% of organizations agree that unstructured data is their biggest data security blind spot. This includes emails, chat messages, documents, source code, screenshots and even AI-generated content. Unlike structured data that lives in databases or cloud infrastructure and follows predictable formats, unstructured data is diverse, dynamic and far more difficult to monitor.
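To put the growth figure in perspective, a short calculation shows what a 55–65% compound annual growth rate implies. This only works through the arithmetic of the range quoted above, under the simplifying assumption that the rate stays constant from year to year. The function names are illustrative.

```python
import math


def doubling_time_years(annual_growth_rate: float) -> float:
    """Years for a quantity to double at a constant compound annual growth rate."""
    return math.log(2) / math.log(1 + annual_growth_rate)


def growth_multiple(annual_growth_rate: float, years: int) -> float:
    """How many times larger the quantity is after `years` of compounding."""
    return (1 + annual_growth_rate) ** years


for rate in (0.55, 0.65):
    print(
        f"{rate:.0%} CAGR: doubles in {doubling_time_years(rate):.1f} years, "
        f"{growth_multiple(rate, 5):.1f}x after 5 years"
    )
```

Under that assumption, unstructured data doubles roughly every 1.4 to 1.6 years and grows to about 9 to 12 times its starting volume within five years.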

Why is this happening? Digital transformation, collaboration tools, remote work and the rise of GenAI have all fueled the explosion of unstructured content. Every message sent in Slack, every comment in Google Docs, every file uploaded to Dropbox represents a potential vector for sensitive data movement. Yet traditional DLP tools weren’t designed to interpret this complexity. They lack the ability to understand the content, including specific language, intent and behavioral context, factors that are essential for securing modern work.

Addressing this challenge means rethinking how DLP works at a foundational level. To manage unstructured data risk, platforms must be able to see, understand and act in real time. This requires high-fidelity classification powered by machine learning, adaptive policy engines that respond to context and automation that remediates without disrupting workflows. Simply put, unstructured data can no longer be the exception. It must become the focus.

Modern DLP isn’t just about adding dashboards or integrating tools. It’s about delivering intelligent, coordinated security outcomes that match the complexity and velocity of today’s data landscape. The shift toward a unified, AI-driven platform marks a foundational evolution, from reactive rules and brittle integrations to coherent, adaptive systems that can learn, respond and scale.

A foundation of trust for autonomous DLP

Embracing an autonomous data security future requires a high level of trust in your data security tools. An organization needs accuracy and consistency across six key areas before it can trust autonomous DLP.

1

Autonomous discovery

Without complete, accurate discovery, sensitive data remains hidden and vulnerable. Reliable discovery builds foundational trust, as organizations can’t protect what they can’t see or understand.

2

Accurate classification

Precise, context-aware classification ensures sensitive data is labeled correctly. Errors erode trust, creating risk and false positives that hinder adoption of autonomous data protection.

3

Advanced detection

Effective detection identifies threats in data at rest without overwhelming teams with noise. Confidence in detection accuracy is vital for trusting an autonomous platform’s ability to act.

4

Appropriate remediation

Remediation actions must be timely, targeted and correct. If responses are inconsistent or disruptive, organizations lose faith in automation and hesitate to enable full autonomy.

5

Automated policy management

Policies must be clear, enforceable and adaptive to change. Consistent policy execution gives organizations peace of mind and enables safe, trusted autonomous operation at scale.

6

Active prevention

Autonomous prevention must be reliable and non-intrusive. If prevention disrupts business or fails to block risks, organizations won’t trust the system to protect what matters.

This transition is happening now because the technology has finally caught up to the need. Advances in machine learning, natural language processing and cloud-native architectures allow platforms to analyze data at scale, recognize subtle behavioral cues and classify with surgical precision. These systems can process signals from endpoints, collaboration tools and cloud services, turning them into useful data points that are enriched with business context.

To keep pace with data security risk, it’s time to apply these advances to security tools that can manage the volume and complexity of today’s unstructured data. That means autonomy for DLP tools. To keep data secure, autonomous DLP needs a single analytical engine, a consistent policy model and a shared data backbone. It means writing a policy once and enforcing it everywhere, at rest and in motion, dynamically and intelligently.
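The idea of writing a policy once and enforcing it everywhere can be sketched as one policy object evaluated by two enforcement points. This is a simplified illustration under stated assumptions. The `Policy` and `Finding` types, the channel names and the keyword check are all hypothetical, and the keyword check is only a stand-in for a real classifier.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass(frozen=True)
class Policy:
    name: str
    is_sensitive: Callable[[str], bool]  # one content check shared by every channel
    action: str                          # e.g. "quarantine" or "coach"


@dataclass(frozen=True)
class Finding:
    policy: str
    channel: str    # "at_rest" or "in_motion"
    location: str
    action: str


def evaluate(policy: Policy, content: str, channel: str, location: str) -> Optional[Finding]:
    """Single evaluation path used by every enforcement point."""
    if policy.is_sensitive(content):
        return Finding(policy.name, channel, location, policy.action)
    return None


def scan_at_rest(policy: Policy, files: dict[str, str]) -> list[Finding]:
    """Apply the policy to stored content, keyed by file path."""
    results = (evaluate(policy, body, "at_rest", path) for path, body in files.items())
    return [finding for finding in results if finding is not None]


def check_in_motion(policy: Policy, destination: str, message: str) -> Optional[Finding]:
    """Apply the same policy to content as it is being sent."""
    return evaluate(policy, message, "in_motion", destination)


# Stand-in classifier: a keyword match, used here only to keep the sketch short.
internal_docs = Policy(
    name="internal-documents",
    is_sensitive=lambda text: "internal only" in text.lower(),
    action="quarantine",
)
```

Because both enforcement points call the same `evaluate` function, a change to the policy takes effect for stored files and outgoing messages at once, with no per-channel rule to keep in sync.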

A platform that truly understands data context, user intent and application behavior can do more than alert. It can take meaningful action, coaching a user in the moment, quarantining a file, escalating to a human only when needed. This moves DLP from observation to orchestration and from manual to autonomous.

With the heavy lifting handled by the platform, security teams can redirect their efforts toward business alignment, proactive risk management and innovation. Instead of managing tools, they manage outcomes. Instead of asking “what happened,” they ask “how can we improve.”

The result is a data protection posture that scales with the business, supports productivity and reinforces security as a strategic asset. This is the vision of modern, autonomous DLP: intelligent, agile and effective by design.

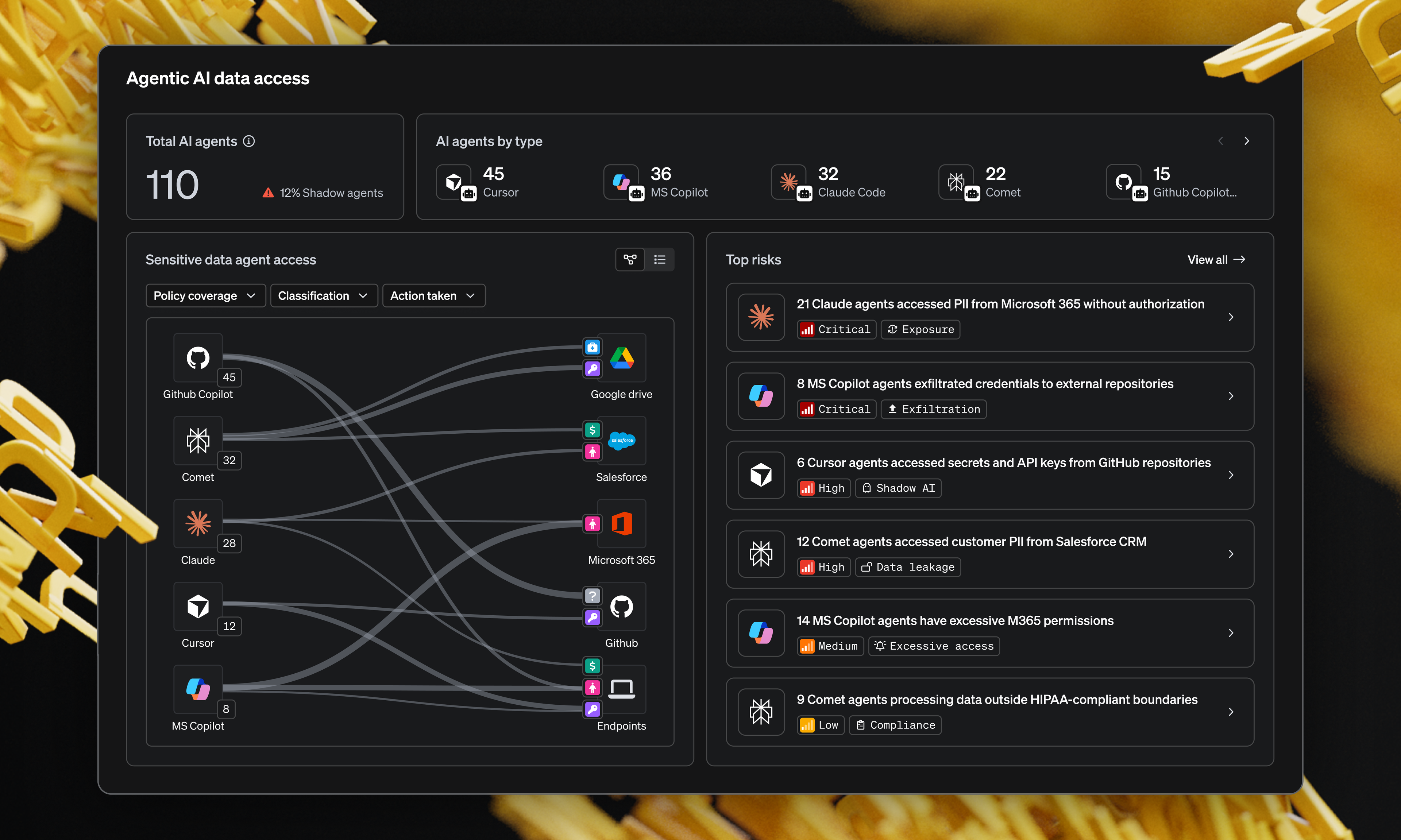

The GenAI challenge for data security

The rise of GenAI represents both an opportunity and a challenge for data security. Tools like ChatGPT, Gemini and Copilot are transforming productivity, but they’re also creating new avenues for data exposure. Employees can now move sensitive information across tools and into public or third-party platforms with a single prompt. Traditional DLP was never designed for this kind of interaction.

Security teams face a new layer of risk: the unintended sharing of confidential data through AI inputs or the generation of sensitive outputs that violate policy. The shift is subtle but significant. It’s no longer just about blocking downloads or scanning emails. It’s about understanding how data is used in creative, non-linear workflows and doing so without stifling innovation.

GenAI accelerates the urgency for autonomous DLP systems. Policies must now account for input fields, context windows and AI-generated content. Enforcement must be precise, dynamic and business-aware.

This moment underscores the need for a platform that doesn’t just monitor endpoints or files, but understands how data flows through language, through context and through human intent. DLP must now be capable of protecting data in conversation, whether that’s in Slack, in an API call or in a prompt to an LLM.

In this environment, static rules and fragmented coverage actively put organizations at risk. GenAI demands a shift from legacy logic to intelligent orchestration. From policy sprawl to platform-level insight. From reactive response to autonomous prevention.

This is not just another use case; it’s a turning point. And how we respond will shape the future of enterprise security.

What security teams really need

Security teams aren’t asking for another dashboard, another flood of alerts or another policy language to learn. What they need is functional clarity: a way to secure data without getting buried in complexity. At the heart of this need is a simple mandate: find the data, fix the issues and stop the bad things from happening. The more this process can happen autonomously, the more effective and resilient the program becomes.

Modern DLP must begin with discovery. Security teams need accurate, comprehensive visibility into where sensitive data lives, how it moves and who has access. This includes unstructured data, stored across devices, SaaS apps, collaboration tools and cloud environments. Traditional methods of data classification, like static rules and manual tagging, are no longer sufficient. Today’s data environments demand intelligent, adaptive classification powered by AI.

This is where accuracy becomes non-negotiable. When data detection is noisy, teams either chase false positives or ignore real risks. Modern platforms must be able to distinguish between benign behavior and risky actions with high fidelity. That means analyzing user behavior, content, metadata and context in tandem. Accuracy is not just about reducing alerts, it’s about increasing trust. Security teams need to trust that when the DLP tool flags something, it’s for a reason. Precision is the foundation of action.

Once data is found and classified, the next step is fixing the issues, often before they turn into incidents. Security teams can’t keep up with a constant stream of manual interventions, so automation is the key to keeping pace. The system must be able to remediate policy violations in real time, such as revoking sharing permissions, enforcing file encryption or guiding users with contextual prompts. This automation must go beyond rules-based workflows. It must adapt to risk level, user role, business context and intent. Done well, automation transforms DLP from a reactive alert engine into a proactive guardian.

Finally, security must focus on prevention, the literal stopping of bad things from happening. This doesn’t mean blocking everything by default. It means having the intelligence to predict, prioritize and prevent when it matters most. This requires context: understanding not just the data, but who’s using it, how and why. Context allows for dynamic, risk-aware decisions, treating a file upload by a finance lead differently than the same action from a new contractor. It turns rigid policy enforcement into intelligent risk management.
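The finance lead and new contractor example can be sketched as a small risk-scoring function. Everything here is an illustrative assumption: the event fields, the weights, the thresholds and the three outcomes (allow, coach, block) were chosen to show the shape of a context-aware decision, not to describe how any particular platform scores risk.

```python
from dataclasses import dataclass

# Hypothetical weights and role mappings, for illustration only.
SENSITIVITY = {"public": 0, "internal": 1, "financial": 3}
EXPECTED_HANDLERS = {"financial": {"finance_lead", "controller"}}
NEW_USER_DAYS = 90


@dataclass(frozen=True)
class UploadEvent:
    user_role: str
    tenure_days: int
    data_label: str
    destination_sanctioned: bool


def risk_score(event: UploadEvent) -> int:
    """Combine data sensitivity with who is acting and where the data is going."""
    score = SENSITIVITY.get(event.data_label, 0)
    handlers = EXPECTED_HANDLERS.get(event.data_label)
    if handlers is not None and event.user_role not in handlers:
        score += 2  # role does not normally handle this kind of data
    if event.tenure_days < NEW_USER_DAYS:
        score += 1  # little behavioral history for this user
    if not event.destination_sanctioned:
        score += 2  # destination is outside approved applications
    return score


def decide(event: UploadEvent) -> str:
    """Map the score to an intervention: allow, coach the user, or block."""
    score = risk_score(event)
    if score >= 6:
        return "block"
    if score >= 4:
        return "coach"
    return "allow"


finance_lead = UploadEvent("finance_lead", 900, "financial", True)
new_contractor = UploadEvent("contractor", 14, "financial", True)
```

With these assumed weights, the same upload of financial data is allowed for the finance lead and blocked for the new contractor. If the finance lead sent the file to an unsanctioned destination, the result would be a coaching prompt, not a hard stop.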

Together, these core functions enable an autonomous DLP to find, fix and stop data security issues. But they must operate as one. Security cannot be stitched together from siloed tools with different interfaces, engines and policies. It needs to be unified, coherent and consistent.

When done right, this unification doesn’t just improve protection, it reduces friction. Teams gain time back. Users face fewer blocks. Policies are easier to manage and evolve. And leadership gains real visibility into impact. Security becomes not just operational, but strategic.

This is the transformation security teams are asking for. Not more alerts, more meetings or more dashboards. They want tools that do the work, quietly, reliably and autonomously. They want to spend their time solving problems, not proving that they’ve looked at them. They want to move from firefighting to forward-thinking.

That’s the promise of a modern, autonomous DLP platform, one that sees everything, understands what matters and acts with clarity. One that supports teams, scales with business and strengthens the foundation of trust in a digital enterprise.

The future according to MIND

Our customers don’t just connect MIND, they depend on us. They need to secure their most sensitive data without slowing down. And together, we are building towards a future where they can put their DLP and Insider Risk programs on autopilot. They see us not just as a tool, but as a partner in redefining what modern data protection can be.

95%

fewer false positives

91%

more accurate classification

From reactive to resilient

Data loss prevention doesn’t have to be a burden. It doesn’t have to be noisy, rigid or ineffective. And it certainly doesn’t have to be a checkbox. The legacy model of DLP has failed not because the mission was wrong, but because the methods were outdated.

The organizations that are winning today, the ones securing data without slowing down, are those that have embraced an autonomous DLP platform. They’ve replaced fragmentation with coherence, false positives with context and alert fatigue with clarity. Most importantly, they’ve moved from a culture of reaction to one of resilience.

With the right technology, security teams can finally get what they’ve always needed: the ability to find, fix and stop data loss with accuracy, automation and context. They can enable the business, empower users and earn the trust of leadership. They can stop chasing incidents and start driving impact.

MIND exists to support that shift. To help you reclaim confidence in your data protection strategy and rebuild DLP into something that actually delivers value. Because in today’s world, data is too important to be protected by yesterday’s tools.

With MIND, data protection doesn’t get in the way, it gets results.

70%

reduction in alert volume

83%

less time triaging alerts